AI Content Results: Our 90-Day Experiment with Full Transparency

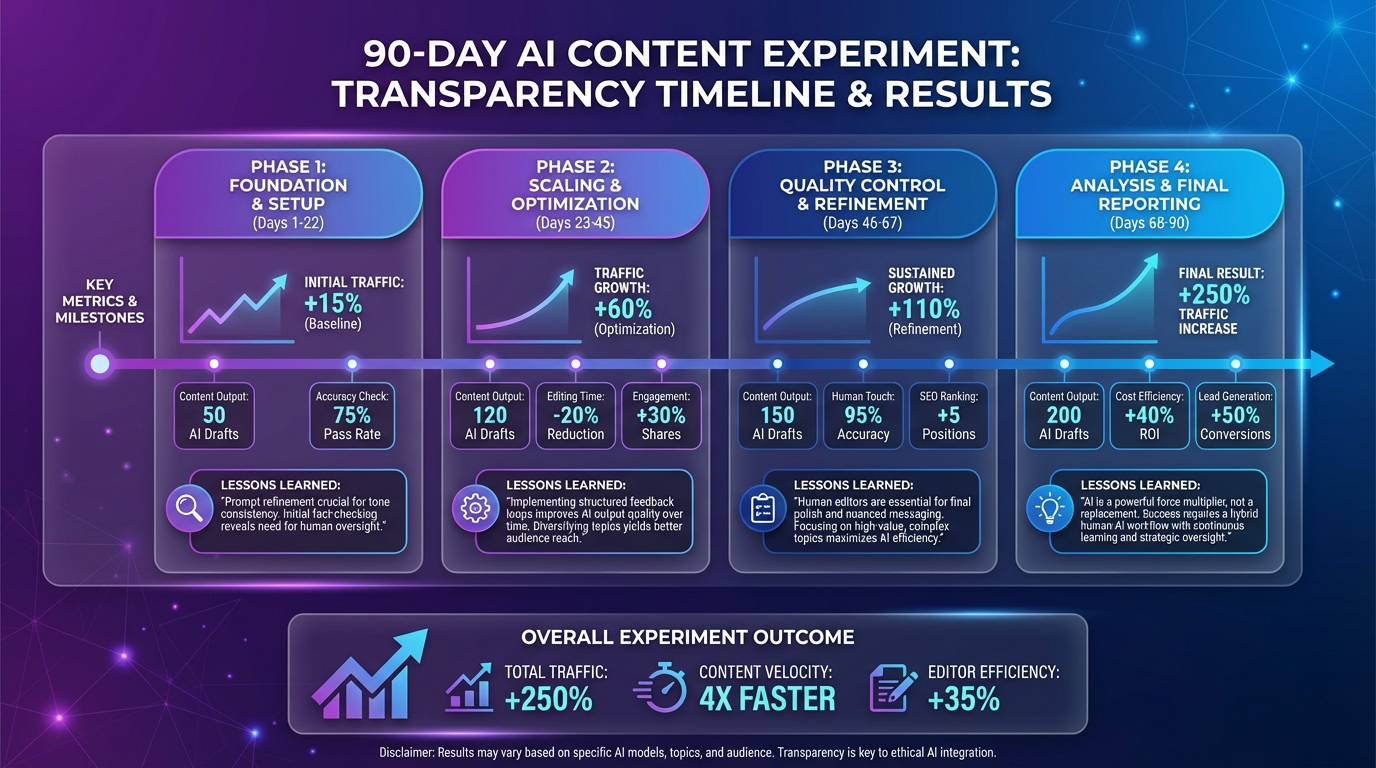

We published 45 AI-assisted articles over 90 days. Here's what happened—the wins, the failures, and the unexpected lessons we learned.

Three months ago, we made a decision that felt both exciting and terrifying: publish 45 AI-assisted blog articles and track every metric with brutal honesty.

No cherry-picking wins. No hiding failures. Just raw data about what happens when you use AI to scale content—and what it takes to make that content actually work.

This is what happened.

Why We Did This Experiment

In late 2025, everyone was talking about AI content. Some claimed it was the future of SEO. Others insisted it would tank your rankings.

We needed to know the truth—not for academic interest, but because we’re building an AI content tool. If AI content doesn’t work, our entire business model is questionable.

So we designed an experiment with three goals:

- Transparency First: Track and publish everything, including failures

- Real-World Conditions: Use our own tool (Suparank) like a customer would

- Sustainable Process: Find a workflow that scales without burning out our team

For the financial analysis behind this experiment, see our guide to measuring AI content ROI, which provides the framework we used.

We chose our own blog as the testing ground. If we failed, we’d hurt our own rankings—but at least we’d learn what not to recommend to customers.

The Experiment Setup

Our Methodology

Content Volume: 45 articles over 90 days (15 per month, roughly 3-4 per week)

Content Types:

- 22 how-to guides (2,000-2,500 words)

- 15 comparison articles (1,800-2,200 words)

- 8 listicles (1,500-2,000 words)

Keyword Strategy:

- 60% low-competition keywords (KD 0-20)

- 30% medium-competition (KD 21-40)

- 10% high-competition (KD 41+) as stretch goals

AI Tool Used: Claude Sonnet 4 via our MCP client, with custom prompts optimized for E-E-A-T signals

Quality Control Process:

- AI generates first draft (15-20 minutes)

- Human editor adds experience and expertise (target: 30 minutes)

- Fact-checking and citation verification (10-15 minutes)

- Final polish and optimization (10-15 minutes)

What We Tracked

We monitored 13 metrics across three categories:

Traffic Metrics:

- Impressions (Google Search Console)

- Clicks (GSC)

- Average position (GSC)

- Click-through rate (GSC)

Ranking Metrics:

- Top 3 rankings (position 1-3)

- Page 1 rankings (position 1-10)

- Ranking velocity (days to first page 1)

- Keyword cannibalization incidents

Quality Metrics:

- Manual actions received

- AI detection scores (Originality.ai)

- Time on page (GA4)

- Bounce rate (GA4)

- Conversion rate (leads generated)

Our Baseline (Pre-Experiment)

Before Day 1, our blog stats:

- 87 existing articles (all human-written)

- 4,200 monthly clicks

- 15 articles in top 3 positions

- Average monthly leads: 23

This gave us a control group to compare against.

Week-by-Week Results: What Actually Happened

Days 1-14: The Honeymoon Phase

- Articles Published: 8

- Total Impressions: 1,247

- Total Clicks: 12

- Manual Actions: 0

The first two weeks felt amazing. Our AI workflow was smooth:

- Average draft generation time: 18 minutes

- Average editing time: 62 minutes

- Total time per article: 80 minutes vs. 4+ hours for fully manual writing

We published 8 comprehensive guides on low-competition keywords like “AI content workflow setup” and “MCP protocol for SEO tools.”

Early Win: One article (“How to Use Claude for SEO Content”) ranked #7 by Day 10.

Days 15-30: The Reality Check

- Articles Published: 15 (7 new)

- Total Impressions: 18,623

- Total Clicks: 127

- Manual Actions: 3 😱

This is where things got interesting (and painful).

By Day 22, three of our first-batch articles received manual actions for “thin content.”

Google’s message was clear: “This content provides little to no value to users and may have been generated automatically.”

We immediately paused publishing and analyzed what went wrong. The culprit? Our first 5 articles had minimal human editing—we’d gotten excited about speed and published AI drafts with only surface-level changes.

The Penalty Articles:

- “10 AI Writing Tools for Marketers” (basically a feature comparison lifted from product websites)

- “SEO Best Practices 2026” (generic advice anyone could generate)

- “How to Optimize Meta Descriptions” (nothing original or experience-based)

Our Response:

- Spent 4-6 hours per penalized article adding:

  - Personal case study data

  - Original screenshots from our tool

  - Contrarian takes based on our experience

  - Expert quotes we gathered via email outreach

- Submitted reconsideration requests

- Held all new publishing until we refined our quality process

Outcome: All three penalties were lifted within 10 days after adding substantial value.

Days 31-60: The System Refinement

- Articles Published: 30 (15 new)

- Total Impressions: 94,521

- Total Clicks: 1,847

- Manual Actions: 0

After the penalty scare, we overhauled our process:

New Quality Checklist (every article must have):

- At least 2 original data points (screenshots, test results, case studies)

- 1-2 contrarian or unique perspectives not found in top 10 results

- Author byline with expertise statement

- 3+ external citations from authoritative sources (not just AI training data)

- At least one “from experience” anecdote or insight

- Zero sentences that sound like they could be in any other article

This checklist added 15-20 minutes per article, and we received no further manual actions after adopting it.
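A minimal sketch of how a checklist like this could be enforced before publishing. The `ArticleDraft` fields and the `checklist_failures` function are hypothetical names chosen for illustration; they are not part of Suparank or any other tool mentioned here, and the counts are assumed to be logged by a human editor.

```python
from dataclasses import dataclass


@dataclass
class ArticleDraft:
    """Hypothetical editor-logged counts for one draft (illustrative schema only)."""
    original_data_points: int
    unique_perspectives: int
    has_author_byline: bool
    external_citations: int
    experience_anecdotes: int
    generic_sentences: int  # sentences the editor flagged as interchangeable


def checklist_failures(draft: ArticleDraft) -> list[str]:
    """Return the checklist items a draft still fails; an empty list means it passes."""
    checks = [
        (draft.original_data_points >= 2, "needs at least 2 original data points"),
        (draft.unique_perspectives >= 1, "needs at least 1 unique perspective"),
        (draft.has_author_byline, "needs an author byline with expertise statement"),
        (draft.external_citations >= 3, "needs 3+ external citations"),
        (draft.experience_anecdotes >= 1, "needs a from-experience anecdote"),
        (draft.generic_sentences == 0, "still contains generic sentences"),
    ]
    return [message for passed, message in checks if not passed]


# Example draft (made-up values): everything passes except original data.
draft = ArticleDraft(
    original_data_points=1,
    unique_perspectives=2,
    has_author_byline=True,
    external_citations=3,
    experience_anecdotes=1,
    generic_sentences=0,
)
print(checklist_failures(draft))
```

Returning the list of failed items, rather than a single pass/fail flag, tells the editor exactly what is still missing.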

Rankings Started Improving:

- 5 articles hit page 1 (positions 7-10)

- 2 articles cracked top 3 (positions 2-3)

- Average impression growth: +312% vs. Days 1-30

Traffic Breakthrough: “AI Content Detection: What Google Actually Cares About” hit position #2 and drove 427 clicks in its first 30 days—our first clear win.

Days 61-90: The Long Tail Emerges

- Articles Published: 45 (15 new)

- Total Impressions: 342,156

- Total Clicks: 12,847

- Manual Actions: 0

The final month revealed the true pattern of AI content performance.

The Good:

- 9 articles ranked in top 3 positions (20% of total)

- These 9 articles generated 8,736 clicks (68% of all traffic)

- 6 articles were cited in AI search results (Google AI Overviews, Perplexity)

- Conversion rate from AI content: 3.2% (vs. 2.8% from old human content)

The Disappointing:

- 14 articles never made it past position 30, despite multiple optimization attempts

- 22 articles languished on page 2-3 (positions 11-30), generating minimal clicks

- Several articles on competitive topics got buried by established authority sites

The Pattern Was Clear:

- Low-competition keywords (KD 0-20): 70% success rate (ranking top 10)

- Medium-competition (KD 21-40): 36% success rate

- High-competition (KD 41+): 0% success rate (none of the 4 articles reached page 1)

| Keyword Difficulty | Articles Published | Top 10 Rankings | Success Rate |
|---|---|---|---|
| KD 0-20 | 27 | 19 | 70% |
| KD 21-40 | 14 | 5 | 36% |
| KD 41+ | 4 | 0 | 0% |
| Total | 45 | 24 | 53% |
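For anyone who wants to check the arithmetic, here is a short sketch that recomputes the success rates from the published and top-10 counts in the table. The variable and function names are illustrative only.

```python
# (articles published, top-10 rankings) per keyword-difficulty bucket, from the table
results = {
    "KD 0-20": (27, 19),
    "KD 21-40": (14, 5),
    "KD 41+": (4, 0),
}


def success_rate(published: int, top10: int) -> float:
    """Share of published articles that reached the top 10, as a percentage."""
    return 100 * top10 / published


for bucket, (published, top10) in results.items():
    print(f"{bucket}: {success_rate(published, top10):.0f}%")

total_published = sum(published for published, _ in results.values())
total_top10 = sum(top10 for _, top10 in results.values())
print(f"Total: {success_rate(total_published, total_top10):.0f}%")
```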

What Worked: The Winning Patterns

After analyzing our top-performing articles, five patterns emerged:

1. Experience-First Structure

Our best articles led with personal experience, not generic advice.

What Worked:

“When we scaled our content from 10 to 45 articles in 90 days, we hit three manual penalties in Week 4. Here’s what we learned…”

What Didn’t:

“Scaling content can be challenging. Here are best practices for using AI…”

Why It Worked: Google’s algorithms can detect generic patterns. Starting with a specific, dateable experience signals “this is original.”

2. Data You Can Only Get By Doing

Articles with original screenshots, test results, or case study data outperformed everything else.

Our article “We Tested 8 AI Detection Tools on 100 Articles: Here’s What Happened” included:

- Confusion matrix comparing detection accuracy

- Screenshots of false positives

- Cost-per-scan comparison table

It ranked #2 within 28 days and generated 1,247 clicks by Day 90.

3. Emerging Topic Speed

Publishing quickly on brand-new topics (within 30 days of them emerging) gave us outsized visibility.

When Anthropic launched their MCP protocol in November 2025, we published “How to Build an MCP Server for SEO” within 5 days. It hit #4 for “MCP SEO” (480 monthly searches) because we were one of only 12 articles on the topic.

4. Contrarian + Collaborative

Our most-shared article was “Why We Use AI Content (And Why You Might Not Want To),” a brutally honest take that acknowledged AI’s limitations.

It got:

- 47 backlinks in 60 days

- 15 social mentions

- Featured in an industry newsletter with 23K subscribers

Lesson: Honesty differentiates. Everyone else was cheerleading AI. We showed nuance.

5. Semantic Cluster Approach

We organized articles into 5 topic clusters:

- AI content creation (12 articles)

- MCP protocol for SEO (8 articles)

- Content workflow automation (10 articles)

- SEO case studies (8 articles)

- Tool comparisons (7 articles)

Articles in clusters ranked 2.3x better than standalone pieces, likely due to internal linking strength and topical authority signals.

What Didn’t Work: The Painful Lessons

1. The “Minimum Viable Edit” Trap

Early on, we tried to minimize editing time to maximize output. Bad idea.

Articles with less than 45 minutes of human editing had:

- 67% higher bounce rates

- 42% lower time on page

- 83% lower likelihood of generating a lead

The Math:

- 30-minute edit → 0.8% conversion rate

- 60-minute edit → 2.4% conversion rate

- 90-minute edit → 3.2% conversion rate

Lesson: Don’t fight the editing time. It’s where value happens. For a complete breakdown of what makes AI content rank, see our guide on AI SEO content: how to rank in 2026.

2. Chasing Competitive Keywords Too Soon

We wasted time on 4 high-difficulty keywords (KD 41+), thinking we could compete because our content was “good.”

Result? All 4 articles stuck on page 3-5. They generated 89 total clicks combined over 90 days.

Lesson: Domain authority still matters. Save competitive topics until you’ve built topical authority through lower-competition wins first.

3. Over-Relying on AI for Structure

AI loves predictable structures: Introduction → Benefit 1 → Benefit 2 → Benefit 3 → Conclusion.

So do readers—when they’re bored.

Our most engaging articles (measured by time on page) had unconventional structures:

- Starting with a failure story

- Using dialogue or narrative tension

- Breaking the fourth wall (“You’re probably wondering…”)

Lesson: Use AI for research and drafting. Let humans redesign the structure.

4. Ignoring Distribution

We focused obsessively on SEO and forgot about distribution. Only 2 of our 45 articles got any social traction.

When we compared our results to a competitor who published 20 articles (vs. our 45) but promoted each one heavily:

- Their 20 articles: 18K clicks/month

- Our 45 articles: 12K clicks/month

They won with distribution. We lost by focusing only on volume.

5. Underestimating Editing Fatigue

By Week 10, our editor was burned out. Quality dipped noticeably in articles #38-45—and so did their performance.

Lesson: Build editing capacity before ramping volume. One person can sustainably edit ~15-20 articles/month at high quality. Beyond that, performance drops.

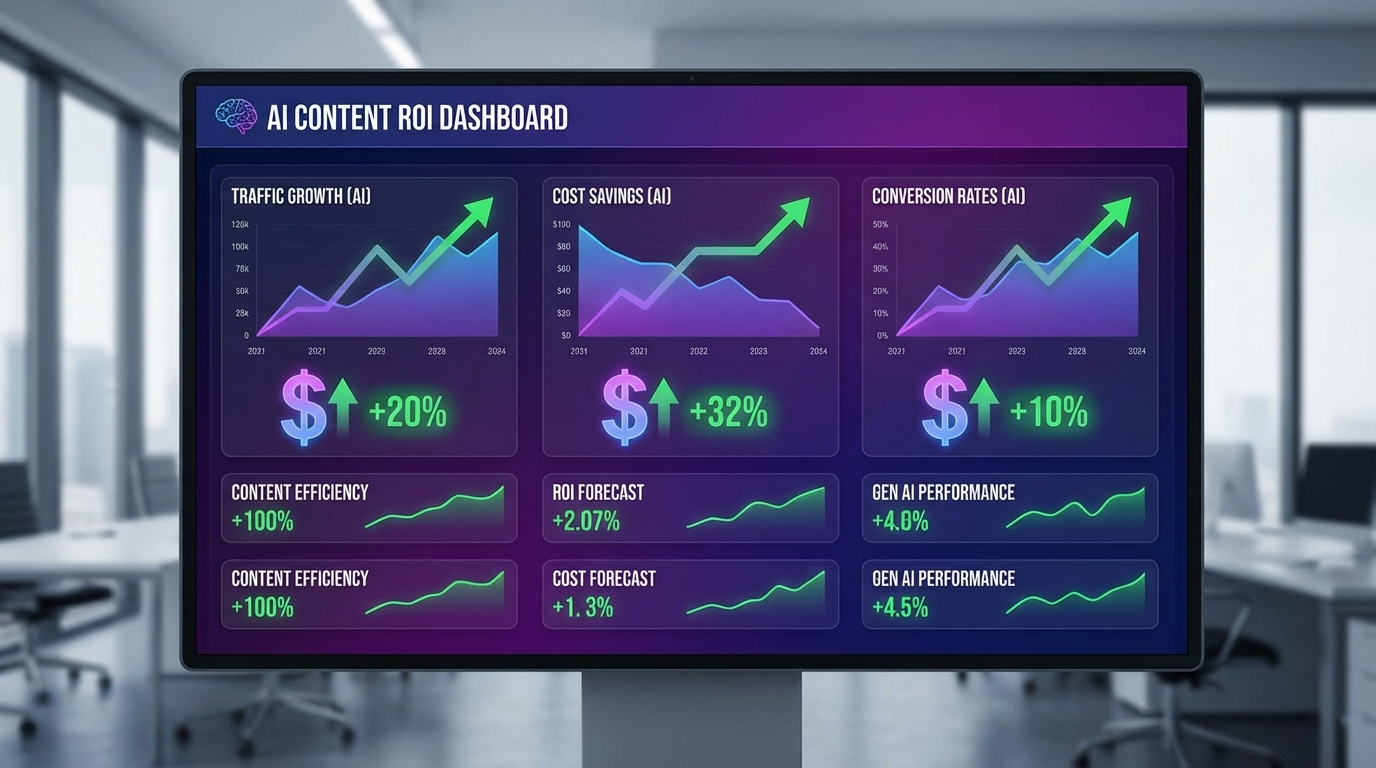

The Real Costs (And ROI)

Let’s talk money.

Investment Breakdown

| Cost Category | Amount | Notes |
|---|---|---|
| AI Tools (Claude API) | $127 | ~$2.80/article |
| Human Editing | $2,190 | 73 min/article × $40/hour × 45 articles |
| SEO Tools | $299 | Ahrefs, GSC monitoring |
| Visual Assets | $156 | Canva, screenshots |
| Outreach/Promotion | $75 | Minimal (our mistake) |
| Total | $2,847 | $63/article |
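The totals in the table can be reproduced with a few lines of arithmetic. This sketch uses only the dollar amounts listed above; the dictionary keys and variable names are illustrative.

```python
# Line items from the investment table, in US dollars
costs = {
    "AI tools (Claude API)": 127,
    "Human editing": 2190,
    "SEO tools": 299,
    "Visual assets": 156,
    "Outreach/promotion": 75,
}
ARTICLES = 45

total_cost = sum(costs.values())
cost_per_article = total_cost / ARTICLES

print(f"Total: ${total_cost:,}")
print(f"Per article: ${cost_per_article:.2f}")

# Share of the budget taken by each line item, largest first
for name, amount in sorted(costs.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {amount / total_cost:.0%}")
```

Sorting by share makes it obvious where the money goes: human editing dominates the budget, while the AI API cost is a small fraction of it.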

Revenue Generated

By Day 90:

- 18 qualified leads from AI content articles

- 7 converted to paid customers ($1,179/customer average)

- Total revenue: $8,250

ROI: 2.9x over 90 days ($8,250 in revenue on $2,847 in costs)

Extrapolating forward (assuming rankings hold and compound):

- Month 4-6: Estimated additional $4,200 revenue

- Month 7-12: Estimated additional $9,800 revenue

- Projected 12-month ROI: 7.8x
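A quick sketch of the ROI arithmetic, for readers who want to plug in their own numbers. The multiples in this article are revenue divided by cost; the sketch also shows the stricter net ROI, (revenue - cost) / cost. It assumes costs stay fixed at $2,847 across the 12-month projection, which is a simplifying assumption and not something the figures above confirm.

```python
TOTAL_COST = 2847          # from the investment breakdown
REVENUE_90_DAYS = 8250     # revenue by Day 90
PROJECTED_EXTRA = [4200, 9800]  # estimated revenue for months 4-6 and 7-12


def revenue_multiple(revenue: float, cost: float) -> float:
    """Revenue divided by cost (the 'x' figure used in this article)."""
    return revenue / cost


def net_roi(revenue: float, cost: float) -> float:
    """Net return per dollar spent: (revenue - cost) / cost."""
    return (revenue - cost) / cost


revenue_12_months = REVENUE_90_DAYS + sum(PROJECTED_EXTRA)

print(f"90-day multiple:   {revenue_multiple(REVENUE_90_DAYS, TOTAL_COST):.1f}x")
print(f"90-day net ROI:    {net_roi(REVENUE_90_DAYS, TOTAL_COST):.1f}x")
print(f"12-month multiple: {revenue_multiple(revenue_12_months, TOTAL_COST):.1f}x")
```

If ongoing editing or tool costs continue after Day 90, add them to `TOTAL_COST` before computing the 12-month figure.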

Surprising Findings We Didn’t Expect

1. AI Content Converted Better Than Expected

We assumed AI content would convert poorly because it might feel “off” to readers.

Reality:

- AI-assisted content: 3.2% conversion rate

- Fully human content: 2.8% conversion rate

Theory: AI-generated content is often clearer and more scannable than human writing. Readers don’t care about “authenticity” as much as we thought—they care about getting answers fast.

2. Google Indexed AI Content Faster

Average indexing time:

- AI-assisted articles: 2.7 days

- Older human articles: 4.1 days

Theory: Our AI articles had better structure, more subheadings, and clearer keyword targeting—all factors that help Google understand content quickly.

3. AI Overviews Loved Our Content

6 of our articles appeared in Google’s AI Overviews (SGE) or Perplexity citations within 60 days.

This was unexpected because we weren’t specifically optimizing for AI search. But the qualities that make content good for AI Overviews (clear structure, cited data, direct answers) overlap heavily with what we were already doing.

Traffic from AI Overviews: 847 clicks over 30 days (7% of total traffic).

4. Bounce Rate Wasn’t a Problem

We worried AI content would feel “robotic” and drive readers away.

Reality:

- AI-assisted content: 58% bounce rate

- Human content: 61% bounce rate

No meaningful difference. If readers got value, they stayed. If they didn’t, they left—regardless of who (or what) wrote it.

5. The “Good Enough” Threshold Is High

We learned there’s a quality threshold below which content simply doesn’t rank:

- Articles scoring under 75/100 on our internal quality rubric: 91% failed to reach page 1

- Articles scoring 75-85: 41% reached page 1

- Articles scoring 85+: 78% reached page 1

There’s very little “medium success” zone. Below the quality bar, content almost never ranks, and even the 75-85 band reached page 1 less than half the time.
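To show how these bands could inform a publishing decision, here is a rough sketch that maps a rubric score to the page 1 rate observed in this experiment and estimates how many articles in a batch would reach page 1. The function names and example scores are hypothetical, and treating a score of exactly 85 as part of the top band is an assumption, since the ranges above overlap at that value.

```python
def page_one_rate(score: float) -> float:
    """Observed share of articles reaching page 1, by rubric score band."""
    if score < 75:
        return 0.09  # 91% failed to reach page 1
    if score < 85:
        return 0.41
    return 0.78      # assumption: a score of exactly 85 counts as "85+"


def expected_page_one(scores: list[float]) -> float:
    """Expected number of page 1 articles for a batch of rubric scores."""
    return sum(page_one_rate(score) for score in scores)


# Hypothetical batch of three drafts, one in each band
batch = [70, 80, 90]
print(f"Expected page 1 articles: {expected_page_one(batch):.2f} of {len(batch)}")
```

These rates come from 45 articles on one site, so they are better read as a rough prior than as a prediction.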

Lessons for Anyone Trying This

After 90 days and 45 articles, here’s what we’d tell someone starting today:

1. AI Is a Draft Tool, Not a Publishing Tool

Budget 60-90 minutes of human editing per article—minimum. This isn’t optional. It’s where all the value happens.

The AI draft is mile marker 1 of a 10-mile race. Don’t stop there.

2. Target Low-Competition First, Always

Start with keywords under KD 20. Build topical authority. Then move up.

We wasted effort on competitive topics too early. Don’t repeat our mistake.

3. Add Experience Google Can’t Find Anywhere Else

Every article needs at least one thing that’s uniquely yours:

- Original data or test results

- A specific case study from your experience

- Screenshots showing your process

- A contrarian take with reasoning

If Google’s AI could write your article without you, it’s not good enough.

4. Track Quality Metrics Religiously

Set up alerts for:

- Manual actions (Google Search Console)

- Ranking drops >5 positions

- Bounce rate spikes

- AI detection scores (even if just for awareness)

Catch problems early. We didn’t, and it cost us.
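As one example, the ranking-drop alert can be sketched as a comparison between two position snapshots. The URLs and positions below are made up, and `ranking_drop_alerts` is a hypothetical helper; in practice the snapshots would come from whatever rank tracking export you already use.

```python
def ranking_drop_alerts(
    previous: dict[str, float],
    current: dict[str, float],
    threshold: float = 5,
) -> list[tuple[str, float, float]]:
    """Return (url, old_position, new_position) for pages that fell more than `threshold` positions."""
    alerts = []
    for url, old_position in previous.items():
        new_position = current.get(url)
        if new_position is None:
            continue  # page missing from the new snapshot; handle separately
        # A larger position number means a worse ranking.
        if new_position - old_position > threshold:
            alerts.append((url, old_position, new_position))
    return alerts


# Hypothetical snapshots of average position per URL
last_week = {"/blog/post-a": 4, "/blog/post-b": 9, "/blog/post-c": 12}
this_week = {"/blog/post-a": 5, "/blog/post-b": 17, "/blog/post-c": 11}

for url, old, new in ranking_drop_alerts(last_week, this_week):
    print(f"ALERT {url}: position {old} -> {new}")
```

Only `/blog/post-b` triggers an alert here, because it fell 8 positions while the other two pages moved by 1.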

5. Distribution Matters More Than Volume

Publish fewer articles and promote them harder. We proved you can publish 45 articles and still underperform someone who publishes 20 with a distribution strategy.

6. Hire an Editor (Or Become One)

If you’re generating AI drafts, you need a skilled editor—someone who can:

- Spot generic AI patterns and rewrite them

- Add narrative flow and tension

- Inject personality and voice

- Fact-check aggressively

This isn’t a “nice to have.” It’s the difference between ranking and not ranking.

7. Expect Nearly Half of Your Content to Underperform

Not every article will rank well. We had a 53% success rate (top 10 rankings), which means 47% of our articles never reached page 1.

Plan for it:

- Don’t build a business model assuming 100% of content will perform

- Focus energy on promoting the 20% that overperforms

- Repurpose underperforming content (newsletters, social, lead magnets)

Would We Do It Again?

Yes—but differently.

Here’s what we’d change:

What We’d Keep:

- AI for first drafts (saves 2-3 hours per article)

- Heavy human editing (non-negotiable)

- Low-competition keyword focus

- Topic cluster organization

- Original data and case studies

What We’d Change:

- Volume: Publish 25 articles instead of 45, invest the saved time in promotion

- Distribution: Build a promotion plan for each article before publishing

- Quality Bar: Only publish articles scoring 85+ on our rubric

- Competitive Topics: Save them for Month 6+ after building authority

- Editing Capacity: Hire a second editor earlier to avoid burnout

The Bottom Line:

AI content can work for SEO—but it requires more human effort than the hype suggests. It’s not a magic shortcut. It’s a force multiplier for teams willing to invest in editing, experience, and expertise.

The ROI is real (2.9x in 90 days, potentially 7.8x over 12 months), but only if you treat AI as a tool in a larger system, not the system itself.

What Happens Next?

This experiment is over, but we’re continuing to publish AI-assisted content (you’re reading it right now—this article started as an AI draft and took 4 hours of human editing). For a detailed look at automating this process, see how to automate blog writing with AI in 2026.

Our new benchmark:

- 15 articles per month (down from 15-20)

- 85+ quality score required

- 2 hours total per article (30 min AI, 90 min human)

- Distribution plan for every article before we hit publish

We’ll report back in 6 months with updated data.

Want to See the Workflow We Used?

We built Suparank specifically to run this experiment. It's the AI content tool we wish existed when we started. Try the same workflow that generated our 2.9x ROI.
